Divergence in Deep Q-Learning: Two Tricks Are Better Than (N)one


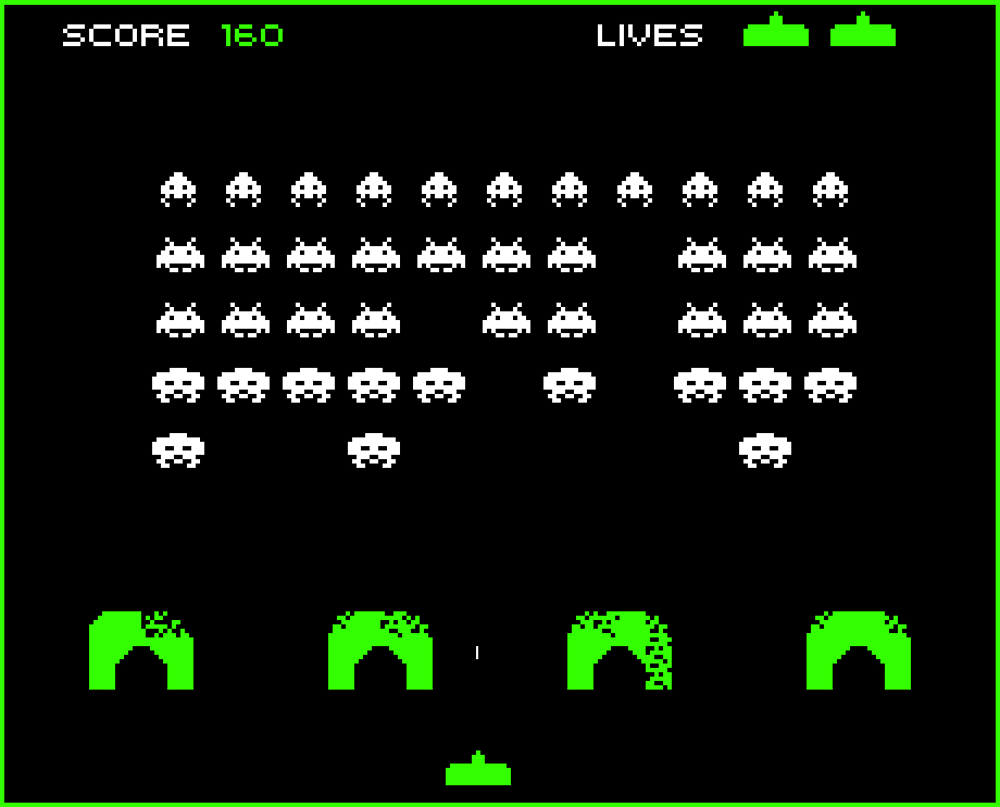

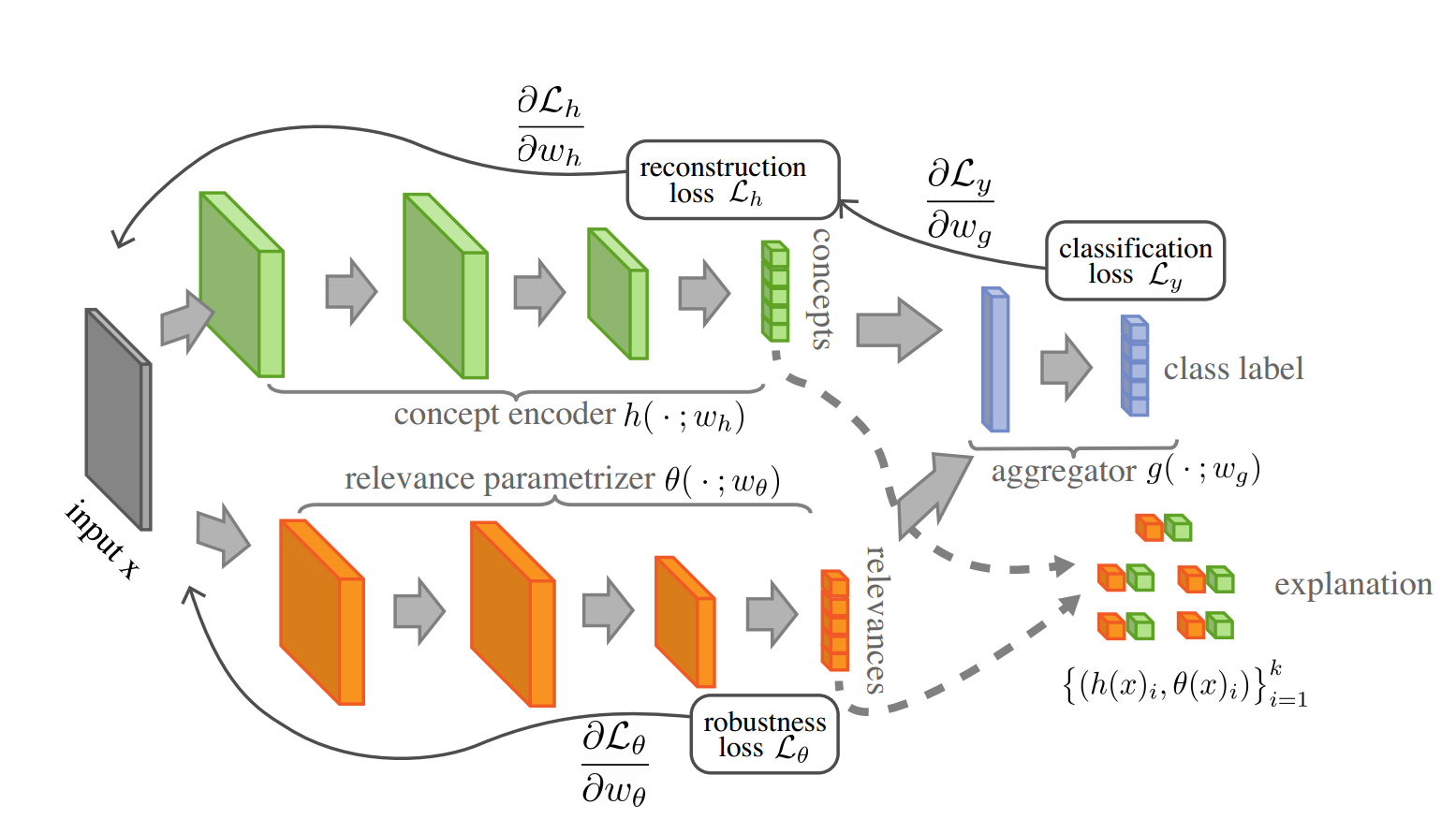

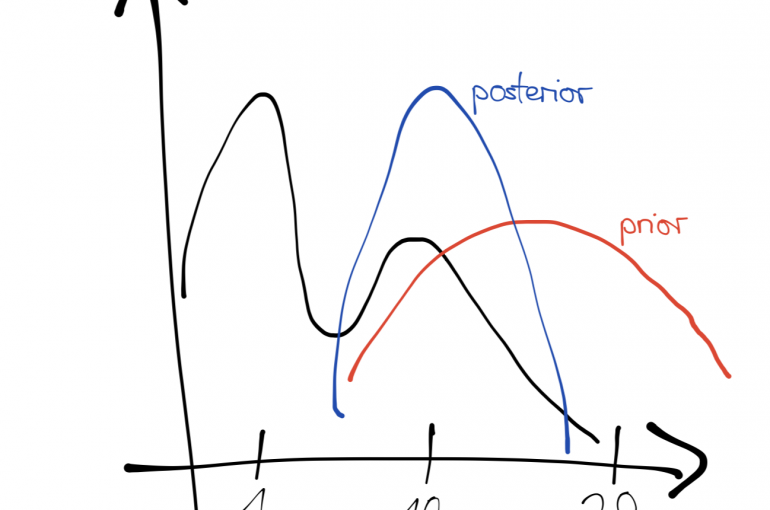

By Emil Dudev, Aman Hussain, Omar Elbaghdadi, and Ivan Bardarov.

Deep Q-Networks (DQN) revolutionized reinforcement learning. DQN was the first algorithm to learn a successful strategy in a complex environment directly from high-dimensional image inputs. In this blog post, we investigate how some of the techniques...